Introducing MCP: A Protocol for Real-World AI Integration

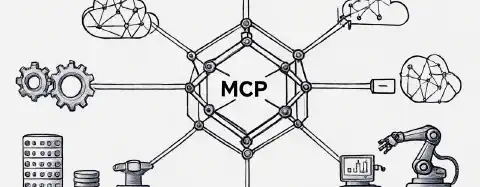

Model Context Protocol (MCP) is an open standard that provides a universal, secure way for AI systems to interact with external data and tools. It simplifies integration by offering a consistent model for connecting AI to the real world.

In this post we will explore why MCP is important, what it actually is, and where it's already being adopted.

Why Is MCP Needed?

Do you remember when ChatGPT was stuck in the past because of its knowledge cutoff of September 2021? While it was an amazing advancement, it lacked some superpowers. Other AI applications like Perplexity connected AI with web search, enabling it to stay up to date and quote sources. The real value of adding AI to your process comes from giving it access to information or letting it do something useful, like adding a reminder. In AI agent speak, such a capability is usually referred to as a “tool”.

Most of the growing list of AI agent frameworks will let you write tools, but only their way. What MCP does is decouple the tools from the AI agent frameworks. That means you can write a tool once and use it within any AI application that supports MCP, such as ChatGPT or VS Code.

If that isn’t enough to convince your boss, you can try quoting from McKinsey’s 2024 research on generative AI adoption. Based on its survey of business leaders, the most useful enabler of future AI adoption is better integration of generative AI into existing systems, cited by 60% of respondents.

How MCP Works: Key Components

MCP follows a simple client-server architecture:

- MCP Hosts are the AI applications themselves — such as Claude, ChatGPT, or AI-powered IDEs like Cursor.

- MCP Clients are protocol handlers running inside the host environment that manage communication between the host and external servers.

- MCP Servers are lightweight processes that expose capabilities like database access, file browsing, or API queries, all via a standard MCP interface.

Each server can expose:

- Tools – Functions the AI can invoke to perform actions.

- Resources – Data objects the AI can browse or retrieve.

- Prompts – Predefined templates or instructions the AI can reuse.
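To make the three capability types concrete, here is a toy sketch in plain Python. It does not use the official SDK. The method names (`tools/list`, `tools/call`, `resources/read`, `prompts/get`) follow MCP's JSON-RPC 2.0 conventions, but the registry, the `add_reminder` tool, the `notes://today` resource, and the simplified response shapes are assumptions made up for illustration.

```python
from typing import Any, Callable

# Toy in-memory registries; every name and value here is illustrative.
TOOLS: dict[str, Callable[..., Any]] = {}
RESOURCES = {"notes://today": "Ship the MCP blog post."}
PROMPTS = {"summarize": "Summarize the following text:\n{text}"}


def tool(fn):
    """Register a plain function as a callable tool."""
    TOOLS[fn.__name__] = fn
    return fn


@tool
def add_reminder(text: str) -> str:
    return f"Reminder added: {text}"


def handle(request: dict) -> dict:
    """Dispatch one JSON-RPC 2.0 request to a tool, resource, or prompt."""
    method = request["method"]
    params = request.get("params", {})
    if method == "tools/list":
        result = {"tools": [{"name": name} for name in TOOLS]}
    elif method == "tools/call":
        text = TOOLS[params["name"]](**params.get("arguments", {}))
        result = {"content": [{"type": "text", "text": text}]}
    elif method == "resources/read":
        uri = params["uri"]
        result = {"contents": [{"uri": uri, "text": RESOURCES[uri]}]}
    elif method == "prompts/get":
        text = PROMPTS[params["name"]].format(**params.get("arguments", {}))
        result = {"messages": [
            {"role": "user", "content": {"type": "text", "text": text}}
        ]}
    else:
        # Standard JSON-RPC error code for an unknown method.
        return {"jsonrpc": "2.0", "id": request.get("id"),
                "error": {"code": -32601, "message": "Method not found"}}
    return {"jsonrpc": "2.0", "id": request.get("id"), "result": result}
```

A host would send something like `handle({"jsonrpc": "2.0", "id": 1, "method": "tools/call", "params": {"name": "add_reminder", "arguments": {"text": "Call Bob"}}})` and hand the text in the result back to the model. A real server built with an SDK also handles initialization, capability negotiation, and input schemas, all of which this sketch leaves out.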

MCP supports the following transport modes:

- Standard input/output (Stdio) — for local, tightly coupled deployments.

- HTTP with Server-Sent Events (SSE) — for remote, scalable connections.

- Streamable HTTP — the latest transport mode, replacing the previous HTTP+SSE combination. It allows for stateless, pure HTTP connections to MCP servers, with an option to upgrade to SSE. This consolidation into a single HTTP endpoint enhances efficiency and simplifies deployment, particularly in serverless or horizontally scalable environments.

This flexibility allows MCP to operate across personal devices, private networks, and cloud-native infrastructure.
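For a feel of what the stdio transport looks like on the wire, the sketch below frames JSON-RPC messages as one JSON document per line, which is how MCP delimits messages over standard input and output. The helper names `write_message` and `read_messages` are my own, and an in-memory buffer stands in for the real pipe between host and server.

```python
import io
import json


def write_message(stream, message: dict) -> None:
    """Write one message as a single line of JSON."""
    # json.dumps escapes newlines inside strings, so a message never spans lines.
    stream.write(json.dumps(message) + "\n")
    stream.flush()


def read_messages(stream):
    """Yield one decoded message per non-empty line."""
    for line in stream:
        line = line.strip()
        if line:
            yield json.loads(line)


# Simulate the pipe with an in-memory buffer instead of a subprocess.
pipe = io.StringIO()
write_message(pipe, {"jsonrpc": "2.0", "id": 1, "method": "tools/list"})
write_message(pipe, {"jsonrpc": "2.0", "id": 2, "method": "ping"})
pipe.seek(0)
received = list(read_messages(pipe))
```

In a real deployment the host launches the server as a subprocess and the same framing runs over the process's stdin and stdout, while the HTTP-based transports carry the same JSON-RPC payloads in request and response bodies.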

Security and Privacy by Design

Connecting AI to external systems raises important privacy and security considerations. While MCP is designed with security in mind, developers should implement best practices such as explicit user consent, data minimization, and clear session boundaries to ensure robust protection.

These principles align with the GDPR and the NIST AI Risk Management Framework, helping developers meet growing regulatory and user expectations.

⚠️ Note: While MCP enforces a structured protocol, it is the responsibility of each server to define strict tool behavior. For example, an insecure tool that exposes file access without restrictions could leak sensitive data like .env files to the model. Always validate inputs, constrain tool capabilities, and avoid exposing unintended data. See the MCP server documentation for best practices.

This approach helps ensure that sensitive data remains protected, even as AI systems become more autonomous and powerful.
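As one way to act on that advice, a file-reading tool can confine itself to a single directory and refuse secret files outright. This is a minimal sketch under my own assumptions: the `safe_read` name, the blocklist, and the choice of `PermissionError` are illustrative and not part of MCP.

```python
from pathlib import Path

# Illustrative blocklist; extend it to match your own secret files.
BLOCKED_NAMES = {".env"}


def safe_read(root: Path, requested: str) -> str:
    """Read a file only if it lives under `root` and is not a blocked secret."""
    root = root.resolve()
    # Resolving collapses ".." segments and symlinks before the check.
    target = (root / requested).resolve()
    if not target.is_relative_to(root):  # Python 3.9+
        raise PermissionError("path escapes the allowed directory")
    if target.name in BLOCKED_NAMES or target.name.startswith(".env."):
        raise PermissionError("access to secret files is blocked")
    return target.read_text(encoding="utf-8")
```

With this in place, `safe_read(project_dir, "README.md")` succeeds, while `safe_read(project_dir, "../../etc/passwd")` and `safe_read(project_dir, ".env")` both raise `PermissionError` before any file is opened.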

A Practical Use Case: AI Assistant for Deployment Monitoring

Imagine a developer using an AI assistant integrated into their IDE. As they work on a feature, they ask the assistant:

“Has this feature been deployed to staging yet?”

Behind the scenes, the assistant queries an MCP server that exposes an internal deployment API. This MCP server abstracts access to deployment status across different environments — staging, production, and so on — and returns a structured response the assistant can interpret.

Because the assistant communicates with a well-defined set of tools and resources through MCP, developers can control exactly what information is exposed and in what format. For example:

- The MCP server provides a tool like checkDeploymentStatus, scoped only to staging and production.

- The AI assistant receives only relevant data, such as deployment timestamps or version numbers.

- All access is governed by permissions declared in the server configuration.

This design enhances clarity, maintainability, and control — the developer doesn’t have to manually wire up custom plugins or expose raw APIs to the AI system. Instead, the MCP layer defines a clean, auditable interface between the assistant and internal infrastructure.
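Here is roughly what the logic behind such a tool could look like, written as a plain Python function (`check_deployment_status` is the snake_case spelling of the tool name above). The deployment data, the feature name `checkout-v2`, and the field names are hypothetical stand-ins for an internal deployment API.

```python
from dataclasses import asdict, dataclass
from typing import Optional

ALLOWED_ENVIRONMENTS = ("staging", "production")

# Hypothetical stand-in for an internal deployment API response.
_FAKE_DEPLOYMENTS = {
    ("checkout-v2", "staging"): {
        "version": "1.4.2",
        "deployed_at": "2025-01-15T10:32:00Z",
        "deployer_email": "dev@example.com",  # internal detail, never returned
    },
}


@dataclass
class DeploymentStatus:
    feature: str
    environment: str
    deployed: bool
    version: Optional[str] = None
    deployed_at: Optional[str] = None


def check_deployment_status(feature: str, environment: str) -> dict:
    """Return only the fields the assistant needs, for allowed environments."""
    if environment not in ALLOWED_ENVIRONMENTS:
        raise ValueError(f"environment must be one of {ALLOWED_ENVIRONMENTS}")
    record = _FAKE_DEPLOYMENTS.get((feature, environment))
    if record is None:
        return asdict(DeploymentStatus(feature, environment, deployed=False))
    return asdict(DeploymentStatus(
        feature, environment, True, record["version"], record["deployed_at"]
    ))
```

The point of the explicit dataclass is that the response is an allowlist: fields such as `deployer_email` stay behind the server boundary unless someone deliberately adds them to `DeploymentStatus`.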

The Growing MCP Ecosystem

MCP adoption is accelerating, with growing community contributions and open-source integrations across platforms and frameworks. The protocol was introduced by Anthropic, who continue to support its development alongside a broader community of developers.

Developers today can already explore a growing set of tools and integrations, including:

- Pre-built MCP servers for common services such as:

- SDKs available in:

- Adapters for AI agent frameworks like:

Getting Started with MCP

For developers interested in exploring MCP:

- Use pre-built servers to connect to common services.

- Build a custom server using the Python or TypeScript SDK.

- Integrate clients into AI workflows using LangChain or LlamaIndex.

- Try out a minimal stateless MCP server implemented with AWS Lambda for lightweight deployments.

Conclusion

MCP offers a secure, standardized way to connect AI systems to the broader world of data and tools. It enables real-time, controlled, and privacy-conscious integrations — critical capabilities as AI becomes more embedded in sensitive and regulated environments.

By bridging the gap between model capabilities and real-world applications, MCP is paving the way for a new generation of useful, safe, and context-aware AI systems.

Just as HTTP standardized the web, MCP may emerge as the protocol that unlocks safe, scalable AI integration across the enterprise.

Next, use the MCP Inspector and connect it to one of the many MCP servers or build your own server. I have written posts covering the official MCP Python SDK as well as FastMCP v2, FastAPI-MCP and Gradio.

Alternatively, put those MCP servers to good use by creating your own AI agent using smolagents.